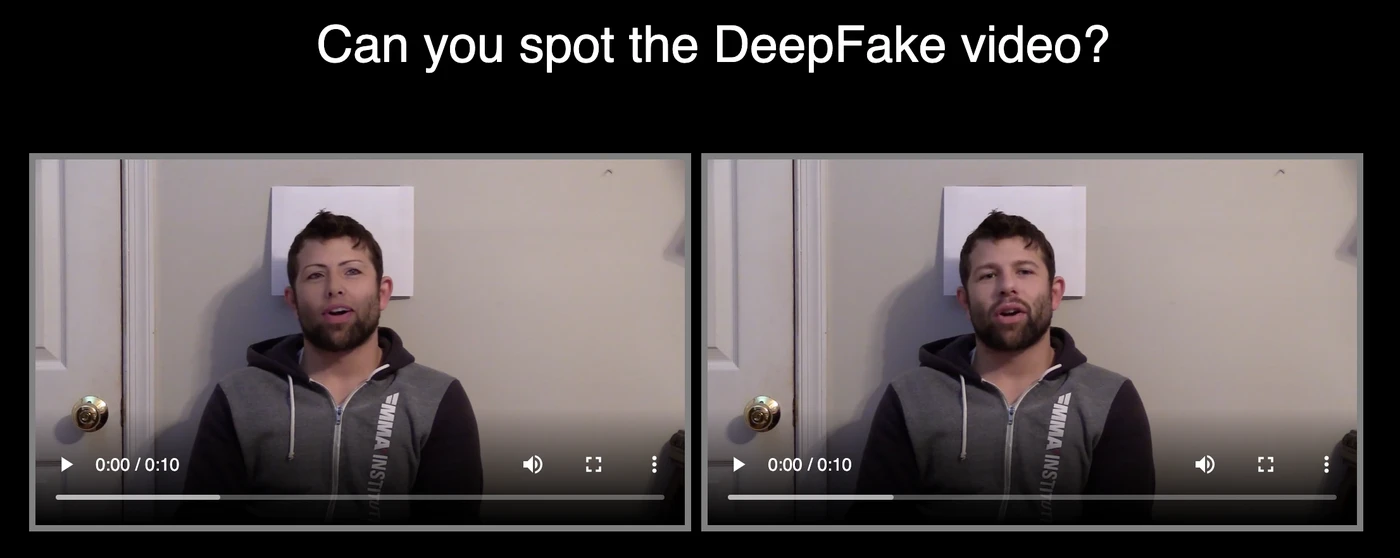

How To Spot A Deepfake Video Or Image

Deepfakes have changed from obvious fakes to nearly perfect synthetic media that can fool even trained observers. As generative adversarial networks and diffusion models keep improving, the race between creation and detection gets more intense. Understanding both manual inspection methods and automated detection systems has become essential for anyone working with visual media verification.

At ABXK.AI, we focus on AI detection across multiple media types. Our AI Text Detector already helps identify AI-generated content, and we are expanding our detection capabilities to include image and video analysis. This article explains the technical foundations that power modern deepfake detection.

What Humans Can Spot

Visual Artifacts and Inconsistencies

The most accessible detection method is to look for visual artifacts in regions that deepfake generators struggle to reproduce. Look for asymmetries in facial features, especially around the eyes and mouth where complex muscle movements happen. Deepfakes often show subtle warping at face boundaries, particularly near hairlines and jaw edges where the fake face meets the original background.

Lighting problems provide another strong clue. Pay attention to how light reflects off different parts of the face - deepfakes often show mismatched lighting directions or wrong shadow placement. The skin texture may look unnaturally smooth or contain repeating patterns that suggest algorithmic generation rather than natural pores and small imperfections.

Biological Signal Problems

Human biology creates subtle patterns that deepfake algorithms have historically failed to reproduce accurately. Eye blinking was one of the most reliable early signs - people at rest typically blink about 15-20 times per minute with characteristic timing, while early deepfakes showed abnormal blinking rates because training datasets mostly contained open-eye images. Modern deepfakes have largely closed this gap, but the timing of blinks and their coordination with speech can still show inconsistencies.
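
The blink-rate cue can be turned into a rough screening check. The sketch below assumes blink timestamps (in seconds) have already been produced by some eye-state detector, which is not shown. The function names, the 15-20 band taken from the text, and the `slack` tolerance are illustrative assumptions rather than an established standard.

```python
def blinks_per_minute(blink_times_s, clip_duration_s):
    """Average blink rate over a clip, in blinks per minute."""
    if clip_duration_s <= 0:
        raise ValueError("clip_duration_s must be positive")
    return len(blink_times_s) * 60.0 / clip_duration_s


def blink_rate_is_unusual(blink_times_s, clip_duration_s,
                          low=15.0, high=20.0, slack=0.5):
    """Flag clips whose blink rate falls well outside the low-high band.

    `slack` widens the band (0.5 means 50% on each side) because real
    blink rates vary with task, fatigue, and lighting. All thresholds
    here are assumptions and would need calibration on real footage.
    """
    rate = blinks_per_minute(blink_times_s, clip_duration_s)
    return rate < low * (1 - slack) or rate > high * (1 + slack)


# Hypothetical one-minute clip with only two detected blinks.
print(blink_rate_is_unusual([12.4, 47.9], clip_duration_s=60))
```

A check like this only makes sense on clips long enough to contain several blinks, and an unusual rate is a reason to look closer, not proof of manipulation.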

Watch for breathing patterns and small movements. Real humans display continuous small adjustments in posture, subtle chest movements from breathing, and natural head movements. Deepfakes often freeze these movements or produce unnaturally stable footage. The pulse also leaves a trace in facial skin: real videos show faint periodic color changes synchronized with the heartbeat. These changes are too subtle to judge by eye, but remote photoplethysmography can measure them, and deepfakes often disrupt or remove them.

Technical Detection Systems

Convolutional Neural Network Approaches

CNN-based detectors form the foundation of most automated deepfake detection systems. These networks analyze spatial features across multiple scales to identify manipulation artifacts. The XceptionNet architecture has become particularly popular, using depthwise separable convolutions that efficiently capture subtle forgery traces.
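
To see why depthwise separable convolutions are considered efficient, it helps to count weights. The sketch below compares a standard convolution with a depthwise convolution followed by a 1x1 pointwise convolution. The layer sizes are illustrative and not taken from any specific XceptionNet layer.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a k x k standard convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 conv that mixes channels
    return depthwise + pointwise


k, c_in, c_out = 3, 128, 256      # illustrative layer sizes
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
print(std, sep, round(std / sep, 1))   # 294912 33920 8.7
```

For this example layer the separable version needs roughly one ninth of the weights, which is where the efficiency comes from.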

The detection process works by training CNNs on large datasets containing both real and manipulated images. During training, the network learns to recognize compression artifacts, color inconsistencies, and boundary problems introduced by face-swapping algorithms. The key lies in the intermediate layers - rather than just classifying images, these layers extract feature maps highlighting regions with unusual statistical properties. For example, upsampling operations used in GAN generators create specific frequency patterns that CNNs can learn to detect.

Multi-scale analysis improves detection by examining images at different resolutions at the same time. Deepfakes often show inconsistencies across scales - they might look convincing at normal viewing distance but reveal artifacts when examined at higher or lower resolutions. CNNs process pyramid-structured inputs to capture these multi-scale differences effectively.
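
A minimal sketch of how a pyramid-structured input could be built, assuming a 2D grayscale array and simple 2x2 average pooling as the downsampling step. Real detectors may use Gaussian pyramids or learned downsampling instead, and the function names here are illustrative.

```python
import numpy as np


def downsample_2x(img):
    """Halve height and width by averaging each 2 x 2 block."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2   # drop odd edge row/col
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def build_pyramid(img, levels=3):
    """Return [full-res, 1/2, 1/4, ...] versions of a grayscale image."""
    pyramid = [np.asarray(img, dtype=np.float64)]
    for _ in range(levels - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


face = np.random.default_rng(0).random((256, 256))   # stand-in for a face crop
print([level.shape for level in build_pyramid(face)])
```

Each level would then be passed to the network (or to a separate branch), so that artifacts visible only at one scale still contribute to the decision.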

Frequency Domain Analysis

Frequency analysis exploits a fundamental weakness in generative models: their inability to perfectly reproduce natural image frequency distributions. Real photographs contain specific frequency signatures created by camera sensors, lenses, and natural light physics. Deepfakes generated through neural networks produce different frequency characteristics.

The detection process applies a Discrete Fourier Transform (DFT) or Discrete Cosine Transform (DCT) to convert images from the spatial domain to the frequency domain. Real images tend to show smooth spectra in which energy falls off gradually from low to high frequencies. Deepfakes, however, often show irregular patterns - sharp breaks in frequency space, abnormal energy concentrations, or missing frequency components that natural images contain.
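
One common way to inspect these spectra is to average the 2D power spectrum over rings of equal radial frequency. The sketch below does this with NumPy and derives a simple high-frequency share from it. The function names, the `cutoff` value, and the idea of using a single ratio as a feature are assumptions for illustration. A real detector would feed the full spectrum to a classifier.

```python
import numpy as np


def radial_power_spectrum(gray):
    """Azimuthally averaged power spectrum of a 2D grayscale image."""
    f = np.fft.fftshift(np.fft.fft2(gray))       # zero frequency moved to centre
    power = np.abs(f) ** 2
    h, w = gray.shape
    y, x = np.indices((h, w))
    r = np.hypot(y - h // 2, x - w // 2).astype(int)   # integer radius per bin
    sums = np.bincount(r.ravel(), weights=power.ravel())
    counts = np.bincount(r.ravel())
    return sums / counts      # index = radial frequency, value = mean power


def high_freq_ratio(gray, cutoff=0.5):
    """Share of ring-averaged power above `cutoff` x the largest full ring."""
    spectrum = radial_power_spectrum(gray)
    r_max = min(gray.shape) // 2
    spectrum = spectrum[1:r_max]          # skip the DC term, ignore corners
    split = int(len(spectrum) * cutoff)
    return spectrum[split:].sum() / spectrum.sum()


# Synthetic comparison: a smooth pattern, then the same pattern with an
# added high-frequency ripple of the kind upsampling layers can leave.
n = 64
y, x = np.indices((n, n))
smooth = np.sin(2 * np.pi * 2 * x / n) + np.sin(2 * np.pi * 3 * y / n)
rippled = smooth + 0.5 * np.sin(2 * np.pi * 24 * x / n)
print(high_freq_ratio(smooth) < high_freq_ratio(rippled))
```

Any threshold on such a ratio has to be calibrated per camera, resolution, and compression level, because JPEG and video codecs also reshape the high-frequency range.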

Combining frequency analysis with spatial CNNs creates hybrid detectors with better performance. These systems extract frequency features through transform operations, then feed both frequency and spatial features into parallel neural network branches. A fusion layer combines the branches, allowing the model to use both types of evidence at the same time. This approach works especially well against high-quality deepfakes that minimize visible spatial artifacts.

Transformer-Based Detection

Vision transformers have advanced deepfake detection by capturing long-range dependencies that CNNs tend to miss. Unlike CNNs, which process local neighborhoods, transformers divide images into patches and model relationships between distant patches through self-attention.

The architecture works by dividing input images into fixed-size patches (typically 16×16 pixels), then flattening and embedding each patch into a vector. These patch embeddings, combined with positional encodings, feed into multiple transformer encoder layers. Each layer contains multi-head self-attention that computes attention scores between all patch pairs, identifying which regions relate to which others across the entire image.
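
The patch and attention steps described above can be sketched in a few lines of NumPy. This is a single attention head with random matrices standing in for trained weights, so it only illustrates the shapes and the data flow. The embedding size of 64 and all variable names are assumptions.

```python
import numpy as np


def patchify(img, patch=16):
    """Split an (H, W, C) image into flattened non-overlapping patches."""
    h, w, c = img.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    gh, gw = h // patch, w // patch
    x = img.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(gh * gw, patch * patch * c)


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over all patch pairs."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # softmax over keys
    return weights @ v, weights


rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))                      # stand-in for a face crop
patches = patchify(img)                              # 14 x 14 = 196 patches
w_embed = rng.normal(0, 0.02, (patches.shape[1], 64))
pos = rng.normal(0, 0.02, (patches.shape[0], 64))    # learned in a real model
tokens = patches @ w_embed + pos
w_q, w_k, w_v = (rng.normal(0, 0.1, (64, 64)) for _ in range(3))
out, attn = self_attention(tokens, w_q, w_k, w_v)
print(patches.shape, out.shape, attn.shape)   # (196, 768) (196, 64) (196, 196)
```

Row `i` of `attn` holds the weights patch `i` assigns to every other patch, which is the 196 x 196 table of pairwise relationships the text refers to.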

For deepfake detection, this global view is crucial. Face manipulation algorithms often create subtle inconsistencies between distant facial regions - for example, lighting on the forehead might not match lighting on the chin. Transformers are well suited to detecting these long-range inconsistencies through their attention mechanisms. The attention weights also offer a degree of interpretability, indicating which image regions the model considers suspicious.

Temporal transformers extend this concept to video sequences. They analyze not just spatial relationships within frames, but temporal relationships between frames. This captures artifacts in motion patterns, expression transitions, and frame-to-frame consistency that single-frame analysis misses.

Biological Signal Extraction Systems

Remote photoplethysmography (rPPG) represents one of the most advanced deepfake detection approaches. This technique extracts heartbeat signals from facial videos by measuring subtle skin color variations caused by blood flow. The detection pipeline involves several technical stages.

First, the system performs facial landmark detection to identify skin regions suitable for rPPG measurement - typically the forehead, cheeks, and nose. It extracts color channel values (particularly the green channel, which shows the strongest pulsatile signal) from these regions across video frames, creating time-series signals. Signal processing steps, including detrending and bandpass filtering (0.7-4 Hz, or roughly 42-240 beats per minute), then remove noise and slow lighting changes.

The extracted rPPG signals are then analyzed with a Fast Fourier Transform to identify the dominant frequency, which corresponds to the heart rate. Real videos produce clear periodic signals with consistent frequency and amplitude patterns. Deepfakes, however, often show irregular or absent periodicity because face-swapping disrupts the subtle color changes that encode pulse information.
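
The signal-processing stages can be sketched as follows, assuming the per-frame mean green value of one skin region has already been extracted (landmark detection is not shown). The band-pass step is done here by masking FFT bins rather than with a designed filter, and the `strength` score is an illustrative periodicity measure, not a standard metric.

```python
import numpy as np


def estimate_heart_rate(green_means, fps, band=(0.7, 4.0)):
    """Estimate pulse rate (bpm) from per-frame mean green values of a skin ROI."""
    signal = np.asarray(green_means, dtype=float)
    t = np.arange(len(signal))
    # Detrend: subtract a straight-line fit to suppress slow lighting drift.
    signal = signal - np.polyval(np.polyfit(t, signal, 1), t)
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fps)
    # Band-pass by masking: keep only plausible heart-rate frequencies.
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    peak_hz = freqs[in_band][np.argmax(spectrum[in_band])]
    # Share of in-band power held by the peak: a crude periodicity score.
    strength = spectrum[in_band].max() / spectrum[in_band].sum()
    return peak_hz * 60.0, strength


# Synthetic 10-second trace at 30 fps: 1.2 Hz pulse, slow drift, sensor noise.
fps = 30
t = np.arange(300) / fps
rng = np.random.default_rng(0)
roi_green = 120 + 0.5 * np.sin(2 * np.pi * 1.2 * t) + 0.02 * t \
    + rng.normal(0, 0.1, t.size)
bpm, strength = estimate_heart_rate(roi_green, fps)
print(round(bpm), round(strength, 2))
```

On this synthetic trace the estimate should land close to 72 bpm with a high `strength` value. A trace without a pulse component gives a much flatter in-band spectrum, which is the kind of difference a detector can use.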

Advanced rPPG detectors use multi-scale spatial-temporal analysis. They extract rPPG signals from multiple facial regions at the same time and create two-dimensional PPG maps where one dimension represents spatial locations and the other represents time. Neural networks then analyze these maps to identify manipulation-specific patterns - different deepfake methods create distinctive disruption signatures in rPPG patterns.
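
A two-dimensional PPG map of this kind could be assembled as sketched below. The example assumes an already cropped and aligned face video stored as a `(T, H, W, 3)` RGB array and uses a uniform grid of cells as the spatial regions. Published systems typically define regions from facial landmarks instead, so the grid and the function name are simplifications.

```python
import numpy as np


def ppg_map(frames, grid=(4, 4)):
    """Build a (regions, time) map of normalised mean green values.

    frames: array of shape (T, H, W, 3), RGB face crop per frame.
    """
    t, h, w, _ = frames.shape
    gh, gw = grid
    ch, cw = h // gh, w // gw               # cell size; leftover border is dropped
    green = frames[:, : gh * ch, : gw * cw, 1].astype(float)
    cells = green.reshape(t, gh, ch, gw, cw).mean(axis=(2, 4))   # (T, gh, gw)
    rows = cells.reshape(t, gh * gw).T                           # (regions, T)
    # Normalise each region's trace so the map shows variation, not skin tone.
    rows = rows - rows.mean(axis=1, keepdims=True)
    std = rows.std(axis=1, keepdims=True)
    return rows / np.where(std > 0, std, 1.0)


video = np.random.default_rng(0).random((90, 64, 64, 3))   # stand-in clip
print(ppg_map(video).shape)   # (16, 90)
```

The resulting array can be treated like a single-channel image, which is what lets an ordinary neural network look for manipulation-specific patterns across regions and time.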

Multi-Modal Detection Systems

Modern detectors combine multiple detection methods to achieve strong performance across different deepfake types. These systems integrate visual features, frequency analysis, biological signals, and sometimes audio cues into unified frameworks. The architecture typically uses a separate specialist network for each method, then combines their outputs through learned fusion strategies.

Fusion can happen at different stages. Early fusion concatenates features from the different methods before classification. Late fusion combines prediction scores from separate method-specific classifiers. Attention-based fusion learns to weight the methods dynamically based on their reliability for each input - if biological signals are weak in a particular video, the system relies more heavily on visual and frequency features.
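
The difference between plain late fusion and reliability-weighted fusion can be shown with a small example. In the sketch below the per-detector scores and reliability values are set by hand. In an attention-based system the reliabilities would come from a learned network, so this only illustrates the weighting step, and all names are hypothetical.

```python
import math


def late_fusion(scores, weights=None):
    """Weighted average of per-detector fake probabilities."""
    names = list(scores)
    if weights is None:
        weights = {n: 1.0 for n in names}
    total = sum(weights[n] for n in names)
    return sum(scores[n] * weights[n] for n in names) / total


def reliability_weights(reliability, temperature=1.0):
    """Softmax over per-input reliability estimates (higher = more trusted)."""
    m = max(reliability.values())
    exps = {n: math.exp((r - m) / temperature) for n, r in reliability.items()}
    z = sum(exps.values())
    return {n: e / z for n, e in exps.items()}


# Hypothetical outputs for one video; the rPPG signal is weak, so its
# detector is given a low reliability value.
scores = {"visual": 0.9, "frequency": 0.8, "rppg": 0.2}
reliability = {"visual": 2.0, "frequency": 2.0, "rppg": -1.0}

plain = late_fusion(scores)
weighted = late_fusion(scores, reliability_weights(reliability))
print(round(plain, 3), round(weighted, 3))   # 0.633 0.834
```

With equal weights the unreliable rPPG score pulls the result toward "real". Once it is down-weighted, the fused score follows the visual and frequency detectors.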

This multi-modal approach provides resistance against adversarial attacks and evolving deepfake techniques. When generators learn to avoid one detection method, others often still expose the manipulation.

The Detection Arms Race

Deepfake detection remains an evolving challenge as generative models rapidly advance. Current detectors show reduced accuracy when tested on datasets different from their training data, indicating overfitting to specific manipulation methods. Diffusion models and newer generative approaches create deepfakes with different artifact patterns than GAN-based methods, requiring continuous detector updates.

The most promising direction involves developing detectors that identify fundamental impossibilities in forged content rather than specific generator artifacts - biological signals, physical light properties, and natural motion patterns that any synthetic system struggles to reproduce perfectly. These approaches should provide more robust defenses as deepfake technology continues to evolve.

ABXK.AI Detection Focus

At ABXK.AI, we are building detection capabilities across text, image, and video content. Our AI Text Detector project demonstrates how machine learning can identify AI-generated content through statistical analysis and pattern recognition. The same principles that power text detection - analyzing subtle patterns that distinguish human-created from machine-generated content - apply to visual media detection.

We believe that reliable AI detection will become increasingly important as generative AI becomes more capable. By understanding both the technical methods and practical limitations of deepfake detection, organizations can better protect themselves against synthetic media threats while making informed decisions about verification processes.

Explore our projects to learn more about our work in AI detection and security.